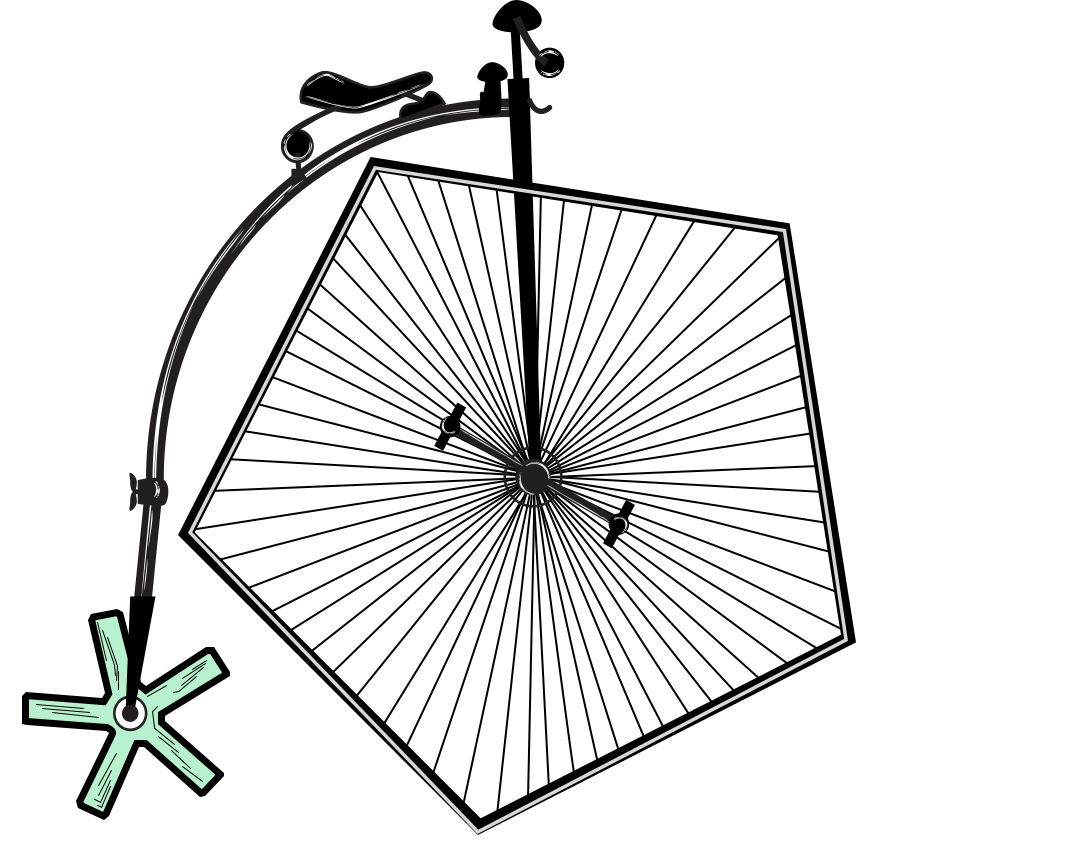

One of the oldest jokes in the world of course… so I had a little fun this morning playing around with Photoshop and reinventing the wheel for this bicycle. It looks a little funny and I’m pretty sure it won’t be as smooth a ride as it could be. This is the picture I have in my mind when I think about Audiovisual Translation and the idea of adding to a CAT tool the ability to create a source text from a video that was provided without one. Why do I think this? For a couple of reasons really:

- A CAT (Computer Assisted Translation) tool, or TEnT (Translation Environment Tool), is based around the premise that you have a source file already. It’s designed to help a translator create a version of that source file in a different language.

- Audiovisual software… to use this nice definition in Wikipedia, is “a type of software used to create and edit subtitles to be superimposed over, and synchronized with, video.”

Both of these tools have a tremendous number of features to help their respective users carry out their tasks as efficiently as possible. Do I think audiovisual software providers should include Translation Memory features to help audiovisual translators carry out their work from start to finish in Subtitle Edit? Not really… and neither do I think we should add the sort of features that are available in Subtitle Edit to Trados Studio, any more than I think we should embed Photoshop features so that translators can touch up non-translatable images for translation. At a very basic level this sort of thing may provide something that a few users find useful… but you only have to look at how these tools have developed over the years to see how specialised they are. I don’t believe there is a one-stop solution to everything for translators working in this field, and I think we should do the right amount of cross-platform development to support what’s really sensible. It’s horses for courses!

Those of you who read the interesting Tool Box Journal from Jost Zetzsche may have seen the “What’s new in subtitling translation tools? (Guest article by Damián Santilli)” published this morning… which of course was the trigger for my article today. Damián mentioned that, during a presentation I gave over a year ago when we released the initial version of the Studio subtitling plugin, I was baffled by a looming question over what happens if you don’t have the source text. Well… I don’t recall being “baffled”, but as I think about it this morning I guess I actually am! Creating the source text feels like a step too far for a translation tool, because we’ll never do it well enough!

As we created the SDL AppStore solution we worked with many professional audiovisual translators who pretty much felt that a CAT tool would never be able to provide the features required by a professional to do their work. So we set about looking at what would be required to convince a professional that we could do this, and in doing so we became more aware of just how specialised audiovisual translation is, and of which features would be needed to make the plugin useful enough for a professional to be able to do their translation work. It’s not trivial and we still have things to do, but I think we are a long way towards having a useful solution that already goes a long way beyond the capabilities of any other CAT tool vendor.

- more file type support

- ability to edit the time codes

- automatic insertion of gaps between frames

- keyboard controls

- waveform support

- etc.

This is probably still not enough for everyone and we will enhance this further as needed to ensure an audiovisual translator can get the most out of using their preferred translation tool for this kind of work.

It’s a shame Damián could only refer to the webinar we presented so long ago… we actually delivered one last week which you can watch here if you’re interested and this will show you just how far we have come since then… but we still don’t create subtitle source files and I still doubt we will reinvent the wheel and deliver a solution to do this.

I do think we’ll see more integrations in the future as part of a workflow allowing companies to manage the creation of subtitles and subsequent translation into multiple languages, but this will be using APIs rather than doing a poor reinvention of the wheel. There was a recent press release from SDL who are doing this already with Canon. This type of thing is only possible with specialised software used by professionals in their field. So I think CAT tool vendors need to stick to what they’re best at and provide features that are really going to be useful for translators handling subtitling files for translation that have already been created professionally taking into consideration all the things that make good subtitles.

I have loaded the presentation from last week to SlideShare, so if you fancy a quick overview without sitting through an hour of listening to me this might be more useful:

Very interesting showcase. But why is it that the reality (translating .srt files) *still* looks completely different? Can you explain why project setup follows the concept that long sentences *must* spread across 5 or even more segments and every segment *must* contain several returns (either hard or soft)? Very sad side effect: TM leverage is close to zero – even when content is almost identical in repetition. These technical conditions of .srt translation have remained unchanged since 2014. Do you know about any upcoming changes? Clients could save a ton of money.

Otherwise a very nice blog and highly appreciated on topic reports. Many thanks for your ongoing work. Best regards from Germany.

Thanks Christian, I’d love to have this discussion in the SDL subtitling community forum because it’ll be far easier to share screenshots and discuss things with a wider audience who may have experience of, and/or an opinion on, this.

I guess part of the problem is that the sentence itself, in these cases, is spread across several subtitles and as each subtitle sits within its own timeframe they need to be separate segments. Otherwise you cannot check whether each one complies with the requirements for subtitling in the first place. Even if you could join them, in a virtual way, purely for some requirement to have complete sentences in a TM you’d still need to edit the individual segments to comply with the requirements of each subtitle. Notwithstanding this, I’m wondering why TM leverage would be so low when surely each repetition would have the same constraints and be segmented in a similar way?
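To make the segmentation point a little more concrete, here is a minimal Python sketch. It is not how Studio or the subtitling plugin works internally; the sample subtitles, the `Cue` class and the `parse_srt` and `virtual_sentences` helpers are all made up for illustration. It reads a tiny .srt sample into one segment per subtitle (each with its own timeframe) and then shows what a "virtual" joined sentence for TM lookup might look like, assuming a sentence simply ends at `.`, `!` or `?`.

```python
import re
from dataclasses import dataclass


@dataclass
class Cue:
    """One subtitle: its number, its timeframe and its text (hypothetical helper type)."""
    index: int
    start: str
    end: str
    text: str


# Invented sample: one sentence spread across three subtitles.
SAMPLE_SRT = """\
1
00:00:01,000 --> 00:00:03,000
When the file is opened

2
00:00:03,200 --> 00:00:05,500
in the translation tool,

3
00:00:05,700 --> 00:00:08,000
each subtitle is a segment.
"""

TIMING = re.compile(r"(\d{2}:\d{2}:\d{2},\d{3}) --> (\d{2}:\d{2}:\d{2},\d{3})")


def parse_srt(data: str) -> list[Cue]:
    """Split .srt text on blank lines; each block becomes one segment."""
    cues = []
    for block in re.split(r"\n\s*\n", data.strip()):
        lines = block.splitlines()
        if len(lines) < 3:
            continue  # not a complete subtitle block
        match = TIMING.match(lines[1].strip())
        if not match:
            continue  # second line is not a timing line
        cues.append(Cue(int(lines[0]), match.group(1), match.group(2), "\n".join(lines[2:])))
    return cues


def virtual_sentences(cues: list[Cue]) -> list[tuple[str, list[int]]]:
    """Join consecutive subtitles until one ends a sentence (assumed: . ! or ?).

    Returns the joined text plus the subtitle numbers it came from, because
    each subtitle still has to be edited and checked on its own.
    """
    sentences = []
    buffer: list[Cue] = []
    for cue in cues:
        buffer.append(cue)
        if cue.text.rstrip().endswith((".", "!", "?")):
            joined = " ".join(c.text.replace("\n", " ") for c in buffer)
            sentences.append((joined, [c.index for c in buffer]))
            buffer = []
    if buffer:  # trailing text with no sentence-final punctuation
        joined = " ".join(c.text.replace("\n", " ") for c in buffer)
        sentences.append((joined, [c.index for c in buffer]))
    return sentences


cues = parse_srt(SAMPLE_SRT)
for cue in cues:
    print(cue.index, cue.start, cue.end, repr(cue.text))
for sentence, indices in virtual_sentences(cues):
    print(indices, sentence)
```

Even with the joined sentence available for lookup, the translation still has to be split back across subtitles 1, 2 and 3 and checked against the constraints of each one, which is the reason given above for keeping them as separate segments.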

But this is why I think a discussion in the community would be more beneficial as it’s easier to show examples and discuss sensibly. Who knows… perhaps we’ll come up with a solution together that makes sense. We have thought about this a little and wondered about having virtual segments for leverage, but for the reasons I just mentioned we couldn’t see the real value in this. So definitely looking forward to discussing.

Many thanks for your swift reply, Paul. It was a pleasure for me to read *exactly* from you what the problem is all about. The segmentation of long sentences in these .srt files is following a specific routine – made up by the person who is in charge of listening to the video whilst performing the transfer into editable text.

Unfortunately, this process is a *given fact* and cannot be changed into something else later during translation, whilst exchanging the files with the client via the SDL Translation Management System. As part of this global network, I am not allowed to change the source file (or the target file) for a better outcome (in the interest of the customer, of course) and better TM leverage.

Having provided you with some keywords of the internal SDL Workflow, you will probably understand that I am not able (and allowed) to share more information. Many thanks for your understanding. Best regards from Christian Kuschner